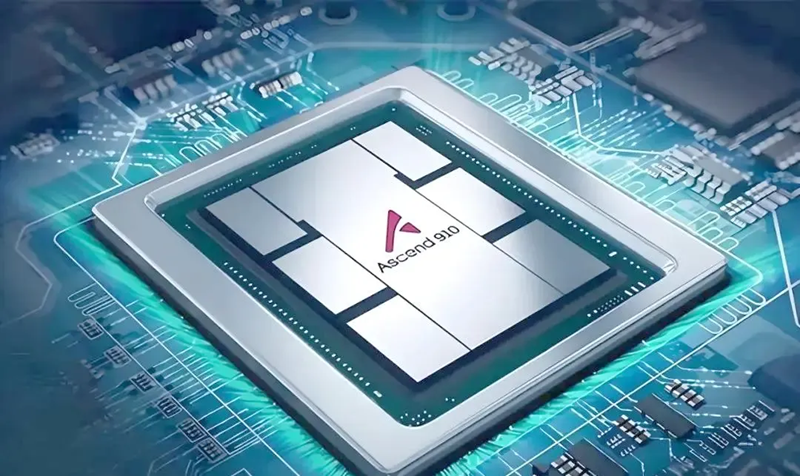

Huawei Unveils Ascend AI Chip Roadmap with In-House HBM Technology

9/21/2025 11:47:58 PM

On September 18, at the Huawei Connect Conference, Huawei announced its latest artificial intelligence processor development plan, aiming to provide powerful computing capabilities so that Chinese users no longer need to rely on overseas-supplied Nvidia products.

For the first time, Huawei publicly revealed the complete roadmap of its Ascend (昇腾) chips and unveiled the Atlas 950 SuperPoD, based on the Ascend 950, as well as the Atlas 960 SuperPoD, based on the Ascend 950DT/Ascend 960.

According to the company, the new SuperPoD technology can interconnect up to 15,488 Ascend AI chips (NPUs) in a single SuperPoD, and SuperPoDs can in turn be combined into mega-clusters with tens of thousands of cards.

Ascend Chip Roadmap

- Q1 2025: Ascend 910C

- Early 2026: Ascend 950PR

- Late 2026: Ascend 950DT

- Late 2027: Ascend 960

- Late 2028: Ascend 970

- The Ascend 950 uses HiBL 1.0, Huawei's self-developed HBM

- The Ascend 950DT upgrades to HiZQ 2.0, a newer generation of Huawei's in-house HBM

Performance Comparison

For reference, a single Nvidia Blackwell Ultra GPU (the GPU used in the GB300) delivers 15 PFLOPS of FP4 compute and carries 288 GB of HBM3e with 8 TB/s of memory bandwidth.

Xu Zhijun (Eric Xu), Huawei's rotating chairman, noted:

"Due to U.S. sanctions, we cannot tape out at TSMC, so the computing power of a single Huawei chip is not yet on par with Nvidia's. However, with more than 30 years of experience in connecting people and machines, we have heavily invested in interconnect technologies and achieved breakthroughs. This enables us to build mega-clusters with tens of thousands of cards, giving us the world's strongest computing nodes."
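Xu's argument is that scale can offset weaker individual chips. One rough way to see this is to divide the totals, using the 15 PFLOPS FP4 figure above for Nvidia's Blackwell Ultra and the Atlas 960 SuperPoD figures listed under Product Highlights (15,488 NPUs, 60 EFLOPS FP4). This is a back-of-the-envelope sketch, not Huawei's methodology: it assumes both FP4 figures are measured the same way (for example, both without sparsity), which the article does not state, and that the SuperPoD total is simply the sum of its NPUs. The function names are illustrative only.

```python
# Back-of-the-envelope arithmetic using figures quoted in this article.
# Assumption: both FP4 figures are measured the same way (e.g. both dense,
# without sparsity); the article does not say.

PFLOPS = 1e15
EFLOPS = 1e18

BLACKWELL_ULTRA_FP4 = 15 * PFLOPS   # per GPU, as quoted above
ATLAS_960_FP4 = 60 * EFLOPS         # whole Atlas 960 SuperPoD
ATLAS_960_NPUS = 15_488


def per_chip(total_flops: float, chips: int) -> float:
    """Average throughput per chip, assuming the total scales linearly."""
    return total_flops / chips


def chips_to_match(total_flops: float, flops_per_chip: float) -> float:
    """Number of chips of a given speed needed to add up to total_flops."""
    return total_flops / flops_per_chip


ascend_960_fp4 = per_chip(ATLAS_960_FP4, ATLAS_960_NPUS)
print(f"Average FP4 per Atlas 960 NPU: {ascend_960_fp4 / PFLOPS:.2f} PFLOPS")
print(f"Ratio to one Blackwell Ultra GPU: {ascend_960_fp4 / BLACKWELL_ULTRA_FP4:.2f}")
print("Blackwell Ultra GPUs needed to match the SuperPoD total: "
      f"{chips_to_match(ATLAS_960_FP4, BLACKWELL_ULTRA_FP4):,.0f}")
```

Under those assumptions, each Atlas 960 NPU averages roughly a quarter of one Blackwell Ultra GPU's FP4 throughput, while about 4,000 such GPUs would be needed to reach the SuperPoD's aggregate figure. The Atlas 960 is slated for Q4 2027, so this compares products from different generations.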

Product Highlights

- Atlas 950 SuperPoD
  - Expected launch: Q4 2025
  - Scale: 8,192 NPUs
  - Performance: 8 EFLOPS FP8
  - Interconnect bandwidth: 16.3 PB/s
  - Total training throughput: 4.91M TPS (tokens per second)

- Atlas 960 SuperPoD
  - Expected launch: Q4 2027
  - Scale: 15,488 NPUs
  - Performance: 30 EFLOPS FP8 / 60 EFLOPS FP4

- TaiShan 950 SuperPoD
  - Expected launch: Q1 2026
  - Based on the Kunpeng 950 general-purpose CPU
  - Supports up to 16 nodes (32 processors)
  - Maximum memory: 48 TB
  - Supports memory, SSD, and DPU pooling
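The SuperPoD totals above can be broken down into an average per-NPU figure. The snippet below is a rough sketch that assumes each total is just the per-NPU throughput multiplied by the NPU count, with no cluster-level overhead; the article does not give per-NPU figures, and the helper name `fp8_pflops_per_npu` is hypothetical.

```python
# Illustrative arithmetic only: averages the SuperPoD FP8 totals listed above
# over their NPU counts. Assumes totals scale linearly with NPU count.

EFLOPS_TO_PFLOPS = 1_000  # 1 EFLOPS = 1,000 PFLOPS

# name -> (NPU count, total FP8 compute in EFLOPS), taken from the list above
SUPERPODS = {
    "Atlas 950 SuperPoD": (8_192, 8.0),
    "Atlas 960 SuperPoD": (15_488, 30.0),
}


def fp8_pflops_per_npu(npus: int, total_fp8_eflops: float) -> float:
    """Hypothetical helper: average FP8 PFLOPS contributed by each NPU."""
    return total_fp8_eflops * EFLOPS_TO_PFLOPS / npus


for name, (npus, fp8_eflops) in SUPERPODS.items():
    avg = fp8_pflops_per_npu(npus, fp8_eflops)
    print(f"{name}: {npus:,} NPUs, ~{avg:.2f} PFLOPS FP8 per NPU")
```

On these assumptions, the average FP8 throughput per NPU roughly doubles between the two SuperPoDs (about 0.98 to about 1.94 PFLOPS); combined with the near-doubling of the NPU count, this accounts for the jump from 8 to 30 EFLOPS.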

Conclusion

Through self-developed HBM, a brand-new architecture, and ultra-large-scale clusters, Huawei is gradually building a complete and independent AI ecosystem. These advancements aim to provide China with stronger domestic solutions for high-performance computing and AI training.

Source: Huawei